Secure your Microsoft Sentinel playbooks with managed identities

… and stop using SPNs and workspace keys

Introduction

One of the many great features of Microsoft Sentinel is its ‘SOAR’ capabilities. By using playbooks based on Azure Logic Apps technology, we can automate a lot of tasks and improve the efficiency of our SOCs. I have already covered this topic a couple of times in earlier articles.

While creating these Logic App workflows, we might be tempted to just slap a couple of steps and connectors together and call it a day. But!

“with great power comes great responsibility” — Stan Lee.

So you might want to make sure that each of your connectors authenticates with least privilege in mind. And what about those passwords or secrets? I wrote earlier about implementing automatic key rotation, but the number of workflows can grow very quickly. How do you keep up with managing all those individual identities?

Well, Logic App workflows do support managed identities. In this article I’d like to guide you through four connectors, which I think are the most popular ones used in the field, and show how to make their authentication managed identity-based. Unfortunately, this isn’t always as straightforward as you’d think, so I hope that by sharing my experiences others won’t need to reinvent the wheel…

Playbooks, connectors and API connections

Each of your playbooks starts with a Sentinel trigger, and right there lies our first authentication challenge. On top of that, you’ll typically use several steps afterwards. The most common building blocks are:

- Microsoft Sentinel (incident or alert trigger)

- Log Analytics query (Azure Monitor Logs connector), to query the workspace and gather additional evidence

- Retrieve external data by querying APIs, e.g. Azure Graph or Defender for Cloud (by using the HTTP connector)

- Run advanced hunting query against Microsoft 365 Defender (the connector is still called ‘Microsoft Defender ATP’)

- Perform device isolation or live response actions in Microsoft 365 Defender

Every time you add one of these steps to a workflow for the first time, you get to decide how that step should handle authentication. An “API connection” Azure resource is then created, which acts as the authentication link for all steps of that connector type.

By clicking on “Change connection” you have the option to “start over” and create a new API connection:

Unfortunately, not every available connector supports authentication with a managed identity… But I happen to be able to help you out with a few workarounds! 😉

Managed identity?

Yes, so what are managed identities in the first place?

From Microsoft’s documentation:

A common challenge for developers is the management of secrets, credentials, certificates, and keys used to secure communication between services. Managed identities eliminate the need for developers to manage these credentials.

While developers can securely store the secrets in Azure Key Vault, services need a way to access Azure Key Vault. Managed identities provide an automatically managed identity in Azure Active Directory for applications to use when connecting to resources that support Azure Active Directory (Azure AD) authentication. Applications can use managed identities to obtain Azure AD tokens without having to manage any credentials.

These have some major benefits over service accounts / app registrations:

- You don’t need to manage credentials. Credentials aren’t even accessible to you.

- You can use managed identities to authenticate to any resource that supports Azure AD authentication, including your own applications.

- Managed identities can be used at no extra cost.

For Logic Apps we have the ability to use both types of managed identities:

- System-assigned managed identity

Here the identity is enabled directly on the resource and tied to that single resource only, for example a virtual machine or, in this case, a Logic App. When the Azure resource is deleted, so is the identity tied to it.

- User-assigned managed identity

As the name suggests, this type of managed identity is managed by us, the users, instead of being tied to a specific resource. This can be handy if you want to use a single identity from multiple different resources.

In most cases the former is perfect, especially when you make use of automated deployment pipelines. After you deploy your Azure resources, the pipeline can make sure that the proper role assignment for the corresponding identities is taken care of.

But in a few cases a user-assigned managed identity might make more sense. More on this later…

Sentinel incident/alert trigger

This one is the easiest. Once a managed identity is available on the Logic App (by enabling the system-assigned identity or selecting a user-assigned one), the Sentinel trigger’s connection can be configured to authenticate with it.

The only remaining step is to grant this identity RBAC permissions on the Sentinel workspace (for example the Microsoft Sentinel Responder role). Otherwise the playbook can’t interact with incidents, for example to add comments, change assignments, or close and reopen incidents.
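If you deploy with ARM, as described next, the role assignment can live in the same template. Below is a minimal Python sketch that emits such a `Microsoft.Authorization/roleAssignments` resource for the workflow’s system-assigned identity. It assumes the template parameters `workflowName`, `workspaceName` and `sentinelRoleDefinitionId`; all three names are hypothetical. The last one would hold the GUID of a built-in role such as Microsoft Sentinel Responder. Treat the output as a starting point, not a tested template.

```python
import json


def sentinel_role_assignment(workflow_param: str = "workflowName",
                             workspace_param: str = "workspaceName",
                             role_param: str = "sentinelRoleDefinitionId") -> dict:
    """Build an ARM roleAssignments resource granting a Logic App's
    system-assigned identity a role on the Sentinel (Log Analytics) workspace.
    Parameter names are hypothetical placeholders for your own template."""
    workflow_id = f"resourceId('Microsoft.Logic/workflows', parameters('{workflow_param}'))"
    return {
        "type": "Microsoft.Authorization/roleAssignments",
        "apiVersion": "2022-04-01",
        # Extension resource: scoped to the workspace rather than the resource group
        "scope": f"[format('Microsoft.OperationalInsights/workspaces/{{0}}', parameters('{workspace_param}'))]",
        # Role assignment names must be GUIDs; guid() keeps the name stable per workflow/role
        "name": f"[guid({workflow_id}, parameters('{role_param}'))]",
        "dependsOn": [f"[{workflow_id}]"],
        "properties": {
            "roleDefinitionId": f"[subscriptionResourceId('Microsoft.Authorization/roleDefinitions', parameters('{role_param}'))]",
            # principalId of the system-assigned identity, read once the workflow exists
            "principalId": f"[reference({workflow_id}, '2016-10-01', 'full').identity.principalId]",
            "principalType": "ServicePrincipal",
        },
    }


print(json.dumps(sentinel_role_assignment(), indent=2))
```

Using `guid()` for the name means redeploying the same template updates the existing assignment instead of creating a duplicate.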

Deploy via ARM template

You can also deploy your Logic App workflow and API connections used for your connectors from an ARM template.

You’ll need to make sure that the API connection’s parameterValueType property is set to Alternative:

```json
"resources": [
  {
    "type": "Microsoft.Web/connections",
    "apiVersion": "2016-06-01",
    "name": "api-connection-azuresentinel-mi",
    "location": "[parameters('location')]",
    "properties": {
      "displayName": "api-connection-azuresentinel-mi",
      "customParameterValues": {},
      "parameterValueType": "Alternative",
      "api": {
        "id": "[concat('/subscriptions/', subscription().subscriptionId, '/providers/Microsoft.Web/locations/', parameters('location'), '/managedApis/azuresentinel')]"
      }
    }
  }
]
```

And for the workflow, you need to enable the system-assigned managed identity:

```json
"resources": [
  {
    "type": "Microsoft.Logic/workflows",
    "apiVersion": "2016-10-01",
    "name": "[parameters('workflowName')]",
    "location": "[parameters('location')]",
    "dependsOn": [
      "[resourceId('Microsoft.Web/connections', 'api-connection-azuresentinel-mi')]"
    ],
    "identity": {
      "type": "SystemAssigned"
    },
    "properties": {
      "state": "[variables('workflowEnabled')]",
      "definition": {},
      "parameters": {}
    }
  }
]
```

And lastly, in the workflow’s parameters you’ll need to pass the proper $connections value so that the workflow knows to use the managed identity for that particular connection:

```json
"parameters": {
  "$connections": {
    "value": {
      "azuresentinel": {
        "connectionId": "[resourceId('Microsoft.Web/connections', 'api-connection-azuresentinel-mi')]",
        "connectionName": "api-connection-azuresentinel-mi",
        "id": "[concat('/subscriptions/', subscription().subscriptionId, '/providers/Microsoft.Web/locations/', parameters('location'), '/managedApis/azuresentinel')]",
        "connectionProperties": {
          "authentication": {
            "type": "ManagedServiceIdentity"
          }
        }
      }
    }
  }
}
```

Query (Sentinel) workspace
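The connection resource and the $connections entry from the ARM snippets above must agree on the connector name and the API connection name. Here is a hedged Python sketch of a small generator that derives both fragments from those two values, so they can’t drift apart. The function name `mi_connection` is hypothetical, and its output simply mirrors the JSON shown above.

```python
import json


def mi_connection(connector: str, connection_name: str) -> tuple[dict, dict]:
    """Return (API connection resource, $connections entry) for a managed
    connector that authenticates with the workflow's managed identity."""
    api_id = ("[concat('/subscriptions/', subscription().subscriptionId, "
              f"'/providers/Microsoft.Web/locations/', parameters('location'), '/managedApis/{connector}')]")
    resource = {
        "type": "Microsoft.Web/connections",
        "apiVersion": "2016-06-01",
        "name": connection_name,
        "location": "[parameters('location')]",
        "properties": {
            "displayName": connection_name,
            "customParameterValues": {},
            "parameterValueType": "Alternative",  # needed for managed identity auth
            "api": {"id": api_id},
        },
    }
    entry = {
        connector: {
            "connectionId": f"[resourceId('Microsoft.Web/connections', '{connection_name}')]",
            "connectionName": connection_name,
            "id": api_id,  # same managed API id as the connection resource
            "connectionProperties": {"authentication": {"type": "ManagedServiceIdentity"}},
        }
    }
    return resource, entry


resource, entry = mi_connection("azuresentinel", "api-connection-azuresentinel-mi")
print(json.dumps(entry, indent=2))
```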

The step I see used most often is the Azure Monitor Logs connector. By running additional KQL queries against the workspace, it’s possible to perform automated triage and hopefully auto-close the incident afterwards.

Unfortunately, this one doesn’t come with support for managed identity.

Luise Freese wrote an excellent blog about this subject earlier. She opted for an HTTP step instead (which DOES support managed identity) to retrieve the query results from the Log Analytics API.
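For reference, such an HTTP action could look roughly like the Python sketch below, which builds the action’s JSON definition. It makes the following assumptions:

- The Log Analytics query endpoint is `POST https://api.loganalytics.io/v1/workspaces/{workspaceId}/query`, where `workspaceId` is the workspace ID GUID, not the Azure resource ID.
- The action uses `ManagedServiceIdentity` authentication with the `https://api.loganalytics.io` audience.
- The identity also needs read access to the workspace, for example the Log Analytics Reader role.

The function name and the `P1D` default timespan are illustrative choices.

```python
def log_analytics_http_action(workspace_id: str, kql: str, timespan: str = "P1D") -> dict:
    """Logic App HTTP action that queries the Log Analytics API using the
    workflow's system-assigned managed identity (illustrative sketch)."""
    return {
        "type": "Http",
        "inputs": {
            "method": "POST",
            "uri": f"https://api.loganalytics.io/v1/workspaces/{workspace_id}/query",
            "headers": {"Content-Type": "application/json"},
            # ISO 8601 duration limiting the query window
            "body": {"query": kql, "timespan": timespan},
            "authentication": {
                "type": "ManagedServiceIdentity",
                # The token must be issued for the Log Analytics API
                "audience": "https://api.loganalytics.io",
            },
        },
        "runAfter": {},
    }


action = log_analytics_http_action("<workspaceId>", "SigninLogs | take 10")
```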

Great idea! But when I tried this out myself, I ran into issues with the way the API formats its response. It returns all of the columns and their data types first, followed by all of the values as separate row arrays:

```json
{
  "tables": [
    {
      "name": "PrimaryResult",
      "columns": [
        { "name": "TimeGenerated", "type": "datetime" },
        { "name": "ResourceId", "type": "string" },
        { "name": "OperationName", "type": "string" },
        { "name": "Category", "type": "string" },
        { "name": "ResultType", "type": "string" },
        { "name": "UserPrincipalName", "type": "string" },
        { "name": "Location", "type": "string" }
      ],
      "rows": [
        [
          "2022-12-12T13:59:03.841Z",
          "/tenants/<tenantid>/providers/Microsoft.aadiam",
          "Sign-in activity",
          "SignInLogs",
          "0",
          "user@domain.com",
          "US"
        ]
      ]
    }
  ]
}
```

I was unable to compose a neat HTML table from these results, because that requires an array of objects (one per row, keyed by column name). And I couldn’t find a way to compose one easily within the Logic App workflow itself.

Unfortunately, there’s no way to run PowerShell code inside a Logic App workflow. That’s a pity, because the Invoke-AzOperationalInsightsQuery cmdlet appeared to return the results in exactly the right format.

The response I was looking for should look something like this instead:

```json
{
  "TimeGenerated": "2022-12-12T13:59:03.841Z",
  "ResourceId": "/tenants/<tenantid>/providers/Microsoft.aadiam",
  "OperationName": "Sign-in activity",
  "Category": "SignInLogs",
  "ResultType": "0",
  "UserPrincipalName": "user@domain.com",
  "Location": "US"
}
```

In Luise’s case, she used the API response to compose a new API request for creating items in a SharePoint list. There the format of the output didn’t matter, because apparently SharePoint expected this format as input.
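Logic Apps can’t run Python either, but the conversion itself is simple to state: pair each row’s values with the column names, in order. Below is a minimal Python sketch of that logic. `rows_to_objects` is a hypothetical helper, and it assumes the first table in the response is the one you want.

```python
def rows_to_objects(response: dict) -> list[dict]:
    """Convert a Log Analytics API response (columns + rows) into a list of
    objects keyed by column name, the shape an HTML table step can use."""
    table = response["tables"][0]  # typically the "PrimaryResult" table
    names = [column["name"] for column in table["columns"]]
    # zip pairs the n-th value in each row with the n-th column name
    return [dict(zip(names, row)) for row in table["rows"]]


sample = {
    "tables": [{
        "name": "PrimaryResult",
        "columns": [{"name": "UserPrincipalName", "type": "string"},
                    {"name": "Location", "type": "string"}],
        "rows": [["user@domain.com", "US"]],
    }]
}
print(rows_to_objects(sample))  # [{'UserPrincipalName': 'user@domain.com', 'Location': 'US'}]
```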

Teamwork

While I was still trying to figure out a solution to this problem, my dear friend and colleague (and fellow watch-nerd! 👌🏻) Jasper Minnaert joined our team. He also couldn’t fathom that something this “simple” wasn’t possible. And what’s really great about expanding a team is that it brings a fresh breeze of new ideas and creativity! Jasper quickly came up with a couple of new ideas, applying techniques I hadn’t even heard of!

It never ceases to amaze me: although we work with Azure resources and other Microsoft Cloud solutions on a daily basis and think we know a thing or two by now, we never know everything. Things literally evolve overnight!

Liquid templates

The idea was to use the Liquid template language to perform the conversion outside of the Logic App workflow itself.

Liquid was originally developed by Shopify, a one-stop platform for building webshops, and is now available as an open source project on GitHub. It can be used to render a source document (xml/json) to produce an output document (xml/json/text/html). Today, it’s used in many different software projects, from content management systems to static site generators — and of course, Shopify.

Deploy Integration account

To make use of Liquid template functionality within a Logic App workflow, we need to deploy an Integration Account in Azure and select it within the workflow settings:

Create Liquid map

Next, create a so-called map, in which the transformation is defined. For our use case we can use the following Liquid template:

```liquid
{% assign rows = content.tables[0].rows %}
{% assign columns = content.tables[0].columns %}
{% assign index = columns.size | minus: 1 %}
[
  {% for row in rows %}
  {
    {% for num in (0..index) %}
      {% if num == index %}
        {% break %}
      {% endif %}
      '{{columns[num].name}}': '{{row[num]}}',
    {% endfor %}
  },
  {% endfor %}
]
```

Save this as a .liquid file and upload it as a new map within your integration account:

Update workflow

Within your workflow add a “Liquid” step and choose “Transform JSON to JSON”. Within this step you’ll need to specify the map you’ve created inside your integration account:
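In code view, that step is a Liquid action. Here is a hedged Python sketch that builds its definition. It assumes a map named `LogAnalyticsToArray` and a preceding HTTP action named `Run_query`; both names are hypothetical.

```python
def liquid_json_to_json_action(map_name: str, source_action: str) -> dict:
    """Definition of a 'Transform JSON to JSON' Liquid action that feeds the
    body of an earlier action through a map stored in the integration account."""
    return {
        "type": "Liquid",
        "kind": "JsonToJson",
        "inputs": {
            "content": f"@body('{source_action}')",  # raw Log Analytics API response
            "integrationAccount": {"map": {"name": map_name}},
        },
        # Only run once the HTTP query has succeeded
        "runAfter": {source_action: ["Succeeded"]},
    }


action = liquid_json_to_json_action("LogAnalyticsToArray", "Run_query")
```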

If all went well we’re greeted by a nice new version of our API response, which can easily be converted into an HTML table:
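The array-of-objects shape matters because the table’s column headers come from the object keys. Below is a small Python sketch of that rendering. It loosely mirrors what the Create HTML table action does with automatic columns, but it is not the action’s actual implementation.

```python
from html import escape


def to_html_table(items: list[dict]) -> str:
    """Render a list of flat objects as an HTML table; headers are taken
    from the keys of the first object (illustrative sketch)."""
    if not items:
        return "<table></table>"
    headers = list(items[0].keys())
    head = "".join(f"<th>{escape(h)}</th>" for h in headers)
    body = "".join(
        "<tr>" + "".join(f"<td>{escape(str(item.get(h, '')))}</td>" for h in headers) + "</tr>"
        for item in items
    )
    return f"<table><thead><tr>{head}</tr></thead><tbody>{body}</tbody></table>"


print(to_html_table([{"User": "a@b.com", "Location": "US"}]))
```

Escaping the values matters here, because incident data such as user names or URLs can contain characters that would otherwise be interpreted as HTML in an e-mail or Teams message.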

Great find Jasper! 🙏🏻

Query external APIs with HTTP

In quite a few cases you need to retrieve data from an external API. In the previous steps we’ve already seen an example of querying Log Analytics, but there are countless other Azure and Microsoft APIs.

I’ve written about integrating VirusTotal and Defender for Cloud in the past as well.

But there’s something specific I’d like to point out here when you try to authenticate with managed identities against certain Microsoft APIs.

In the case of VirusTotal, you authenticate with a key that is managed externally, and you provide this key as part of your request. But for many Microsoft APIs the required permissions are managed through Azure Active Directory.

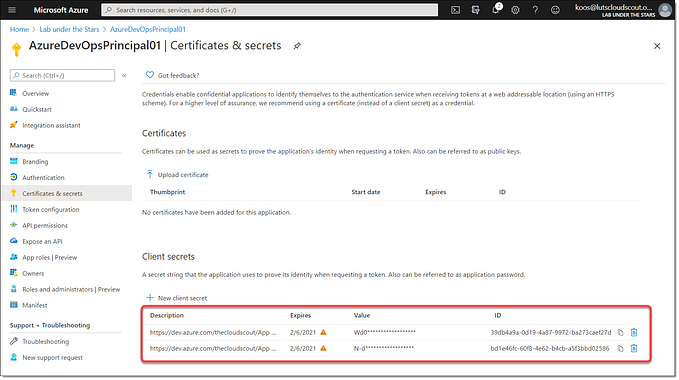

Normally, when you create an app registration you have the ability to grant Microsoft Graph or other specific API permissions to that application. But since our managed identities are considered “Enterprise Applications”, the Azure portal will not provide an option to configure these permissions!

We need to resort to external tools instead, such as scripting against the Azure AD API or using the New-AzureAdServiceAppRoleAssignment PowerShell cmdlet.
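Scripting against the API boils down to one Microsoft Graph call per permission: a `POST` to `/servicePrincipals/{resource-id}/appRoleAssignedTo` with the managed identity’s object ID, the resource service principal’s object ID and the app role ID. Below is a minimal Python sketch that looks up the role and builds that request. It assumes you already fetched the resource service principal, including its `appRoles`, and that you hold a token with `AppRoleAssignment.ReadWrite.All`. Sending the request is left out, and the function name is hypothetical.

```python
def app_role_assignment_request(msi_object_id: str, resource_sp: dict, permission: str) -> tuple[str, dict]:
    """Build the Microsoft Graph request that grants an application permission
    (app role) exposed by resource_sp to a managed identity."""
    matches = [role for role in resource_sp["appRoles"]
               if role["value"] == permission and "Application" in role["allowedMemberTypes"]]
    if len(matches) != 1:
        raise ValueError(f"expected exactly one app role named {permission!r}, found {len(matches)}")
    url = f"https://graph.microsoft.com/v1.0/servicePrincipals/{resource_sp['id']}/appRoleAssignedTo"
    body = {
        "principalId": msi_object_id,     # object ID of the managed identity
        "resourceId": resource_sp["id"],  # object ID of e.g. the Microsoft Graph service principal
        "appRoleId": matches[0]["id"],
    }
    return url, body
```

Matching the exact role value, rather than a prefix, avoids accidentally picking up a broader role such as a `ReadWrite` variant.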

Setting up permissions with PowerShell

First, we need to know what specific permissions need to be assigned to the enterprise application/managed identity.

I find the easiest way is to open the “App registrations” blade in Azure Active Directory, select a random application and go to “API permissions”.

Here you can browse through all of the available Application permissions:

It’s important to note down the “Application (client) ID” and the exact permission name.

Here are some examples:

- For actions towards Azure Active Directory, such as listing group members, resetting passwords or changing roles, you’ll need to grant “Microsoft Graph” permissions. Microsoft Graph has an application ID of 00000003-0000-0000-c000-000000000000, and some example permissions are User.Read.All and Group.ReadWrite.All.

- To interact with Microsoft 365 Defender you might need two different application IDs. “WindowsDefenderATP” (fc780465-2017-40d4-a0c5-307022471b92) has permissions such as Alert.Read and Machine.Isolate. But permissions like AdvancedHunting.Read, CustomDetections.ReadWrite and Incident.Read are part of “Microsoft Threat Protection” (8ee8fdad-f234-4243-8f3b-15c294843740).

Use the PowerShell script below to assign the permissions your playbooks need. Replace the variables to match your tenant ID and Logic App workflow name. The script retrieves the corresponding managed identity/enterprise application and grants it every permission listed in the three permission arrays at the top.

```powershell
# Your tenant ID (in the Azure portal, under Azure Active Directory -> Overview)
$TenantID = "<tenantID>"

# Name of the managed identity (same as the Logic App name for a system-assigned identity)
$DisplayNameOfMSI = "logic-soar-01"

# Well-known application IDs (DON'T CHANGE)
$GraphAppId            = "00000003-0000-0000-c000-000000000000" # Microsoft Graph
$DefenderAppId         = "fc780465-2017-40d4-a0c5-307022471b92" # WindowsDefenderATP
$ThreatProtectionAppId = "8ee8fdad-f234-4243-8f3b-15c294843740" # Microsoft Threat Protection

# Permissions to be granted for each application
$GraphPermissions = @(
    "User.Read.All"
)
$DefenderPermissions = @(
    "AdvancedQuery.Read",
    "Alert.Read",
    "Ip.Read.All",
    "Machine.Isolate",
    "Machine.LiveResponse",
    "Machine.Read.All"
)
$ThreatProtectionPermissions = @(
    "AdvancedHunting.Read",
    "Incident.Read"
)

# The AzureAD module only works in Windows PowerShell, not PowerShell Core.
# Installing it requires admin rights on the machine:
# Install-Module AzureAD

# Connect to the tenant
Connect-AzureAD -TenantId $TenantID

$MSI = Get-AzureADServicePrincipal -Filter "displayName eq '$DisplayNameOfMSI'"

function Grant-AppRoles {
    param([string]$ResourceAppId, [string[]]$Permissions)

    $ResourceSP = Get-AzureADServicePrincipal -Filter "appId eq '$ResourceAppId'"
    Write-Host $ResourceSP.DisplayName -ForegroundColor Yellow

    foreach ($Permission in $Permissions) {
        Write-Host "Assigning $Permission to $DisplayNameOfMSI..." -ForegroundColor DarkGray
        # Match the exact name or the name plus ".All" (e.g. "Alert.Read" -> "Alert.Read.All").
        # A wildcard such as "Alert.Read*" would also match "Alert.ReadWrite.All".
        $AppRole = $ResourceSP.AppRoles | Where-Object {
            $_.Value -in @($Permission, "$($Permission).All") -and $_.AllowedMemberTypes -contains "Application"
        } | Select-Object -First 1

        if (-not $AppRole) {
            Write-Warning "Permission $Permission not found on $($ResourceSP.DisplayName)"
            continue
        }

        New-AzureAdServiceAppRoleAssignment -ObjectId $MSI.ObjectId -PrincipalId $MSI.ObjectId -ResourceId $ResourceSP.ObjectId -Id $AppRole.Id | Out-Null
    }
}

Grant-AppRoles -ResourceAppId $GraphAppId -Permissions $GraphPermissions
Grant-AppRoles -ResourceAppId $DefenderAppId -Permissions $DefenderPermissions
Grant-AppRoles -ResourceAppId $ThreatProtectionAppId -Permissions $ThreatProtectionPermissions
```

Ideally, you want to make this part of the automated deployment pipeline in which you also deploy the Logic App workflows themselves.
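In a pipeline, the script may run against an identity that already holds some of the permissions, and creating an assignment that already exists typically fails. One way to make reruns idempotent is to first compare the wanted permissions with the identity’s current assignments. You could get those, for example, from `GET /servicePrincipals/{msi-id}/appRoleAssignments` in Microsoft Graph. Here is a hypothetical Python helper for that comparison:

```python
def missing_app_roles(wanted: set[str], existing_assignments: list[dict], resource_sp: dict) -> set[str]:
    """Return the permission names in `wanted` that the identity does not yet
    hold on resource_sp, given its current app role assignments."""
    role_value_by_id = {role["id"]: role["value"] for role in resource_sp["appRoles"]}
    held = {
        role_value_by_id.get(assignment["appRoleId"])
        for assignment in existing_assignments
        if assignment["resourceId"] == resource_sp["id"]  # ignore other resource apps
    }
    return wanted - held
```

Only the permissions the helper returns would then be passed on to the assignment step.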

Although we weren’t able to assign permissions from the Enterprise applications blade, it DOES show the permissions granted by our PowerShell script:

User-assigned managed identities

The steps above are a perfect example of why a user-assigned managed identity might be a better approach when used in conjunction with automated deployment pipelines. Remember that a system-assigned managed identity ceases to exist once the corresponding Azure resource is removed. You don’t want to go through the hassle of re-assigning permissions every time you replace an Azure resource.

So if you have an environment where you redeploy resources quite often, you might want to consider using user-assigned managed identities, specifically for those connections that require API permissions like the ones above.
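With a user-assigned identity, two fragments from the ARM section change: the workflow’s identity block, and the connection’s authentication, which then has to reference the identity’s resource ID. Here is a hedged Python sketch that emits both. It assumes a template parameter called `userAssignedIdentityName`, which is a hypothetical name.

```python
def user_assigned_fragments(identity_param: str = "userAssignedIdentityName") -> tuple[dict, dict]:
    """Return (workflow 'identity' block, $connections 'connectionProperties')
    for a workflow that authenticates with a user-assigned managed identity."""
    uai_id = f"[resourceId('Microsoft.ManagedIdentity/userAssignedIdentities', parameters('{identity_param}'))]"
    workflow_identity = {
        "type": "UserAssigned",
        # ARM allows an expression as the property name here
        "userAssignedIdentities": {uai_id: {}},
    }
    connection_properties = {
        # The connection must name which identity to use
        "authentication": {"type": "ManagedServiceIdentity", "identity": uai_id},
    }
    return workflow_identity, connection_properties
```

Role assignments and API permissions then target this identity’s principal ID, which stays the same when the workflow itself is redeployed.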

Conclusion

I hope I was able to spark enough interest in managed identities that you’ll consider migrating away from app registrations and other interactive logins.

If you have any follow-up questions, never hesitate to reach out to me!

— Koos